2025-12-24

Today marks a pivotal moment for the future of computing and a landmark event for our fund. We are thrilled to announce that our portfolio company, Groq, has entered into a definitive agreement to be acquired by NVIDIA.

Our investment in Groq has delivered a 6x MOIC in just 12 months. This exceptional outcome is not a product of market timing but a validation of a core thesis we have championed from the beginning: the irreversible primacy of infrastructure in the age of AI.

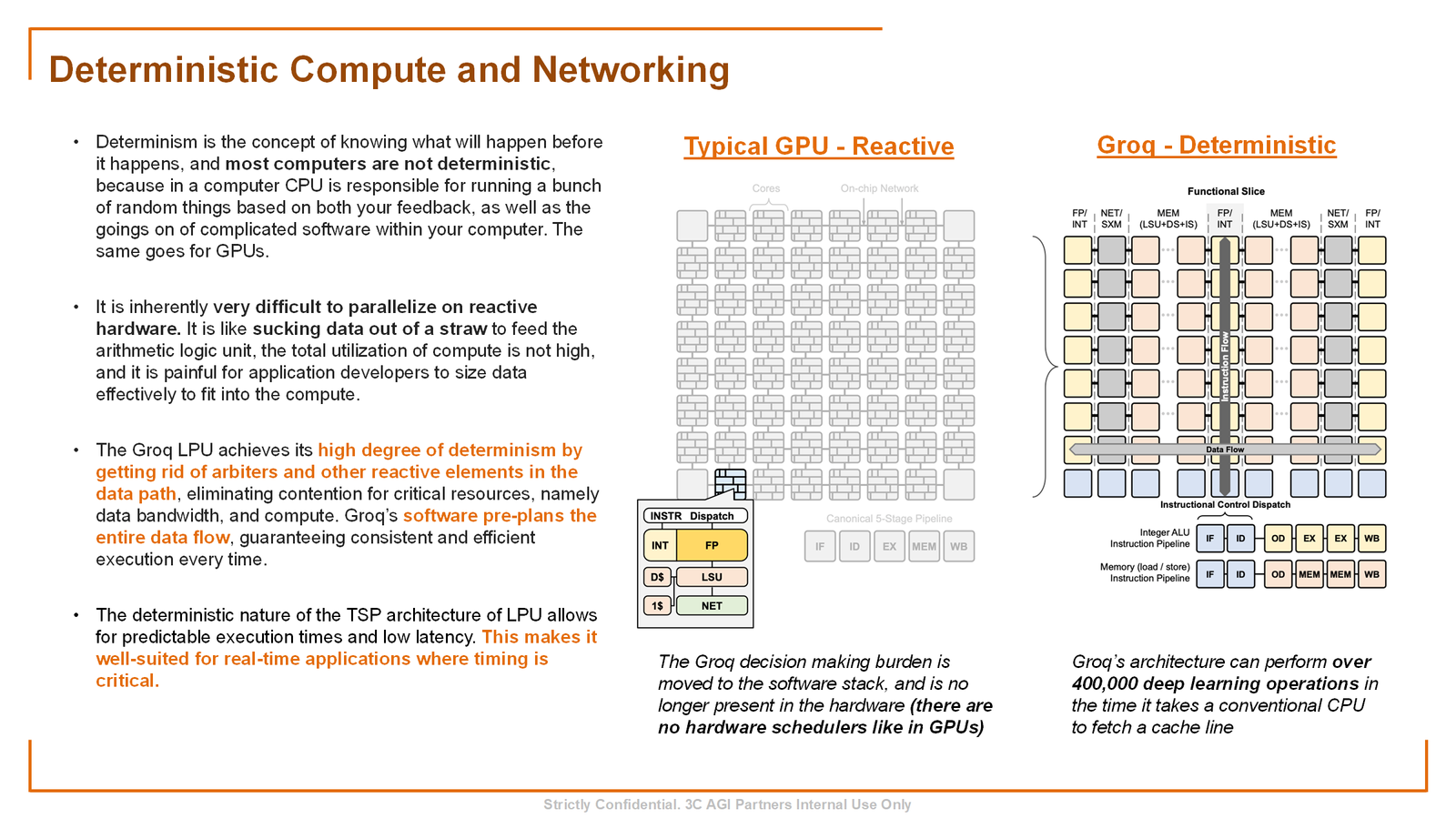

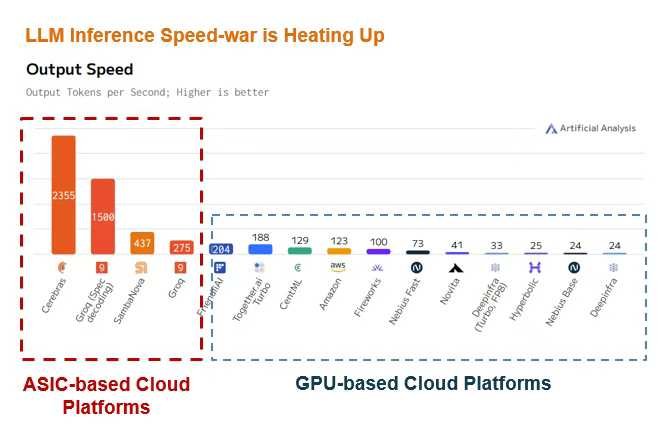

When we invested, we saw a team tackling one of the most profound bottlenecks in modern computing: deterministic, low-latency inference. While the world chased floating-point teraflops for training, Groq's LPU (Language Processing Unit) architecture was engineered for sheer velocity of execution, achieving sub-millisecond latency per token in large language model inference. In benchmarks, Groq's systems demonstrated the ability to serve Llama 3 70B at over 300 tokens per second, real-time performance that redefines the user experience.

Why NVIDIA Acquired Groq: Securing the Inference Frontier

NVIDIA's dominance in AI training, with its Hopper and Blackwell GPUs commanding an estimated 90%+ market share, is unquestioned. However, the inference landscape is more fragmented and poses a different set of challenges: cost, latency, and power efficiency at scale.

The Latency Crown - Groq's hardware and software stack sidesteps the off-chip memory-bandwidth bottleneck of traditional GPU architectures by keeping model weights in on-chip SRAM. Its deterministic execution model means performance is predictable and blazingly fast. For real-time AI (think interactive agents, robotics, and next-gen search), this is a prerequisite. NVIDIA now instantly owns the state of the art in low-latency inference hardware.

The Software Moat Extension - NVIDIA’s CUDA is the industry’s most unassailable software moat. Groq’s compiler-first approach represents one of the few truly novel and mature alternatives developed outside the CUDA ecosystem. Acquiring this talent and IP allows NVIDIA to fortify its own inference software offerings and neutralize a potential long-term architectural threat.

The Systems Play - Groq’s approach as a systems company aligns perfectly with NVIDIA’s own pivot to selling full systems (DGX, HGX). The numbers tell the story: the estimated $400 billion data center GPU market is increasingly shifting towards full-system sales, which command higher margins and deeper customer lock-in.

The Bottom Line - This is a technology and talent acquisition that seals NVIDIA’s hegemony across the entire AI workload spectrum, from training to the most demanding inference tasks. And when you are sitting on $200bn in free cash flow, a $20bn acquisition is hardly a hefty price tag.

Industry Implications: Waves Across the Silicon Seas

For HBM - Groq’s architecture is famously SRAM-based, not HBM-dependent. This acquisition validates that the future of AI silicon is architecturally diverse. While HBM demand will remain colossal for training (DRAM bit growth for AI is still projected at a ~45% CAGR), alternative architectures for specific workloads are now validated at the highest level. Expect intensified investment in memory innovation beyond the HBM scaling roadmap.

For Other AI Chip Startups & The Cerebras Moment - This is a defining event for the sector.

The Bar is Raised - Pure-play "faster GPU" clones are now irrelevant. NVIDIA has co-opted the leading alternative architecture for inference. To compete, startups must prove a *fundamental* architectural advantage for a specific, valuable workload.

Implications for Cerebras - For Cerebras, another cornerstone of our portfolio and a company on the path to the public markets, this acquisition is a powerful tailwind. Cerebras represents the other end of the architectural spectrum: the world's largest chip, the Wafer-Scale Engine (WSE-3), designed to demolish the biggest training and frontier-scale scientific inference problems. NVIDIA's move to consolidate the inference space sharpens the focus on, and elevates the valuation of, a pure-play architectural leader in giant-scale training. Cerebras's publicly disclosed results (training a 1-trillion-parameter model on a single rack, a task that would otherwise require thousands of GPUs) cement its unique position. Just as Groq's acquisition validates specialized inference, Cerebras's impending listing will validate specialized large-scale training. The market is now sophisticated enough to recognize and value these distinct, non-competing architectural paradigms.

Our Investment Thesis: Infra First - A Thesis Validated

The Groq outcome, and the impending Cerebras chapter, are dual pillars of our fund's mantra: Infra First.

We believe the value in a technological paradigm shift accrues *upwards* from the foundational layers. Before killer apps become ubiquitous, the pipes, plumbing, and power must be built. Our investment map targets the picks-and-shovels of the AI revolution:

Compute fabric, storage & memory hierarchy, and networking & interconnect

Alternative data center solutions – in space!

Sustainable energy in the form of nuclear fusion

By focusing on infrastructure, we capture value that is non-discretionary. Whether the winning application is generative video, scientific discovery, or autonomous agents, they all demand more performance, lower latency, and greater efficiency. They all run on infra.

Our strategy of identifying and backing foundational, paradigm-shifting teams at the silicon level is the highest-impact approach to venture in this era.