2026-02-14

2026-02-14

0

0

We're at an Inflection Point in AI Compute

For the past decade, when we talked AI hardware, the conversation always started with training. Everyone obsessed over who could blast a trillion-parameter model from zero to hero the fastest. GPUs ruled that world—flexible, ecosystem-rich, adaptable to every algorithmic twist and turn.

But look at the signals: Nvidia's Groq “acquire-hire”, OpenAI's $10B+, 750MW deal with Cerebras, and the subsequent drop of GPT-5.3-Codex-Spark, the message is loud and clear - the battlefield is shifting from training to inferencing.

Reuters: In mid-January 2026, OpenAI signed a three-year, 750-megawatt capacity agreement with Cerebras worth over $10 billion.

Source: Company

Here's the kicker: AI's primary customer isn't human anymore, it's other AI. When an agent needs to fire off ten or hundred model calls behind the scenes to complete one task, the bottleneck stops being "does it compute correctly?" and becomes "how fast and cheap can it compute correctly?"

Get that, and you'll understand why OpenAI is betting on Cerebras' decidedly non-mainstream architecture. This is a technological fork in the road.

Codex-Spark: Built for Speed, Not Just Smarts

Let's talk product. GPT-5.3-Codex-Spark is OpenAI's admission that one size doesn't fit all. It's a slimmed-down Codex variant, laser-focused on real-time software development, running on Cerebras' WSE-3—a chip that treats latency as a first-class citizen.

The numbers: 3-4x faster than GPT-5.1-Codex-mini on SWE-Bench Pro and Terminal-Bench 2.0, and up to 15x faster than the flagship GPT-5.3-Codex. Context window hits 128K, with throughput clocking 1,000+ tokens per second. In plain English? You're typing, and the model's already done before you blink.

OpenAI is essentially splitting Codex into two modes: "deep reasoning + long-horizon execution" versus "real-time collaboration + rapid iteration." Spark owns the latter—perfect for surgical code edits, logic refactoring, UI tweaks, style fixes. The kind of stuff where perceptual latency kills productivity. External evals consistently note: probably the first AI coding assistant that actually types faster than you think.

Cerebras' Wafer-Scale Architecture: A Technical Gut Punch

OpenAI is explicit about why: WSE-3 provides a "latency-first" serving layer, already integrated into their production inference stack.

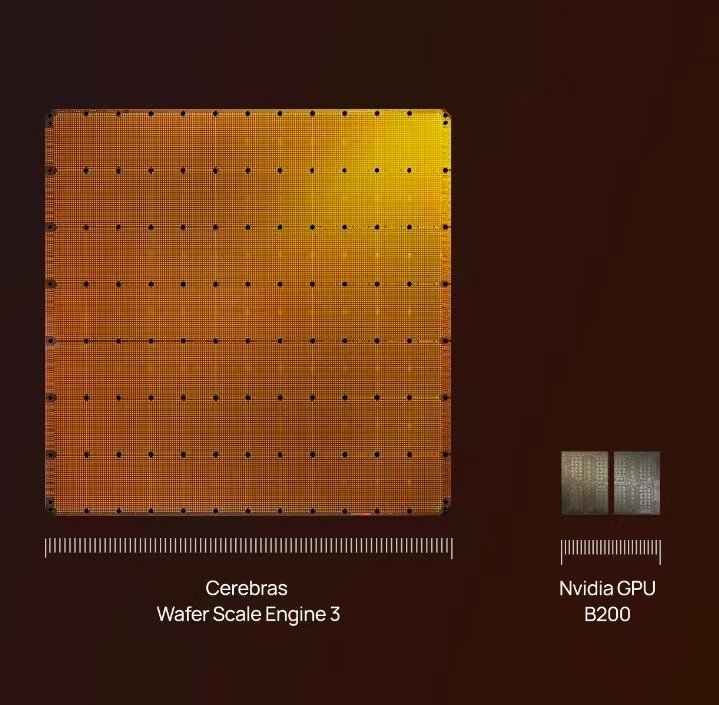

The WSE-3 is a monster. We're talking a full 300mm wafer—uncut, unchopped—functioning as one logical processor. 4 trillion transistors, 900,000 AI-optimized cores, 44GB of on-chip SRAM, all stitched together with a 2D mesh interconnect delivering 21 petabytes per second of memory bandwidth. That's 7,000x the H100's bandwidth, staying entirely on-silicon. The compiler essentially unrolls Transformer layers across this mesh, keeping weights and activations local. No HBM fetches, no all-reduce across NVLink, no "hurry up and wait" for memory. This is dataflow architecture done right—compute happens where data lives. OpenAI already stress-tested this with gpt-oss-120B, hitting ~3,000 tokens/second—a world record driven by the simple fact that the model lives on one piece of silicon. No network overhead, no synchronization hell, just pure, uncut throughput.

From "Humans Using AI" to "AI Using AI": The Latency Multiplier Effect

If you're just measuring "human-perceptible" latency—1000 tok/s versus 200 tok/s—you might think, “cool, its faster”. But flip the lens to agentic workflows, and the math explodes. Modern AI agents aren't monolithic; they're graphs of models—orchestration layers decomposing tasks, code models writing scripts, retrieval models fetching context, verification models checking work. Every edge in that graph is a model call. Total latency is the critical path sum of all those hops.

Cut single-call latency by 50%, and a 20-hop agent chain runs 2x faster end-to-end. And critically, AI-to-AI call frequency dwarfs human interaction rates by orders of magnitude. We're talking thousands of internal calls per user-facing response.

Codex-Spark is the Trojan Horse here. It's not just a coding assistant, it's a real-time AI-to-AI hub. You type your intent in the IDE; Spark parses context, generates patches, refactors UI. But behind the scenes? It's firing off calls to other models for long-horizon tasks, static analysis, security scanning, auto-testing. The human sees a cursor moving; the system sees a cascade of model-to-model chatter.

Once you accept that advanced AI systems burn most of their compute on internal model calls, not final NL generation, the infrastructure question becomes: who owns the lowest token cost per watt per dollar at scale? That's the future pricing power of AI infrastructure.

Cerebras' wafer-scale play is architecturally purpose-built for this "AI-serving-AI" world. It's not trying to be a GPU replacement for everything. It's surgically optimized for large-model inference - long context, high throughput, interactive latency. The WSE-3 shoves massive compute and SRAM onto one die to maximize data locality—weights, KV cache, activations all within millimeters. The system-level result? Higher "effective tok/s per watt per dollar" with tighter, more predictable latency distributions.

OpenAI's $10B Vote of Confidence

The sequencing here is telling. OpenAI didn't lead with some "biggest, baddest" general model on Cerebras, they led with Codex-Spark, a product where real-time performance is the entire value prop. That's a deliberate demo: inference isn't training's sidekick anymore; it's worth its own dedicated hardware track. From an investment lens, this is OpenAI putting real production traffic behind Cerebras' WSE architecture—a long-term, scaled validation that wafer-scale isn't just benchmark theater, but can shoulder massive interactive workloads.

Who's Laying the Foundations for the Next Decade of Compute?

Put the pieces together: 750MW, $10B+ locks Cerebras into OpenAI's core infrastructure through 2028. GPT-5.3-Codex-Spark demos what wafer-scale inference unlocks for real-time products. This isn't a supplier deal—it's a strategic co-architecture of the future AI stack.

At this point, investors should be asking themselves, "who's building the substrate for an inference-dominated, agent-heavy world?"

Betting on inference-first architectures—especially heterogenous plays like Cerebras that push wafer-scale to its extreme, is, in my view, one of the highest-conviction positions in AI today.

The train-to-inference shift is here, and the silicon winners will be those who built for AI serving AI, not AI serving humans.